Here are ten thoughts on AI that I personally find economically useful. Let’s go.

First: there is no AGI, there are many AGIs. That is: we empirically observe polytheistic AI (many strong models) rather than monotheistic AI (a single all-powerful model). We have many models from many factions that have all converged on similar capabilities, rather than a huge lead between the best model and the rest. So we should expect a balance of power between various human/AI fusions rather than a single dominant AGI that will turn us all into paperclips/pillars of salt.

Next, AI moves all costs to prompting and verifying. Basically, today’s AI only does tasks middle-to-middle, not end-to-end. So all the business expenditure migrates towards the edges of prompting and verifying, even as AI speeds up the middle.
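Here is a toy calculation that makes the arithmetic concrete. The stage costs are made-up numbers chosen for illustration, not measured data. If AI makes the middle step ten times cheaper while prompting and verifying cost the same as before, the edges go from 20% of total cost to roughly 71%.

```python
# Toy illustration with hypothetical numbers (not measured data):
# a task has three stages, and AI only accelerates the middle one.

def cost_shares(prompt: float, middle: float, verify: float) -> dict[str, float]:
    """Return each stage's fraction of the total cost."""
    total = prompt + middle + verify
    return {
        "prompt": prompt / total,
        "middle": middle / total,
        "verify": verify / total,
    }

# Before AI: the middle dominates (hypothetical units of effort).
before = cost_shares(prompt=10, middle=80, verify=10)
# After AI: assume the middle becomes 10x cheaper; the edges are unchanged.
after = cost_shares(prompt=10, middle=8, verify=10)

edges_before = before["prompt"] + before["verify"]  # 20 / 100
edges_after = after["prompt"] + after["verify"]     # 20 / 28

print(f"edges before: {edges_before:.0%}, after: {edges_after:.0%}")
```

The absolute cost of the edges never changes here. Their share grows only because the middle shrinks.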

AI is amplified intelligence, not artificial intelligence. Today’s AI is not truly agentic because it’s not truly independent of you. The current crop of agents can’t set complex goals or properly verify outputs. You have to spend a lot of effort on prompting, verifying, and system integration. That just means the smarter you are, the smarter the AI is. It’s really amplified intelligence, more than agentic intelligence.

AI doesn’t take your job, it lets you do any job. It allows you to be a passable UX designer, a decent SFX animator, and so on. But that doesn’t necessarily mean you can do the job well, as a specialist is often needed for polish.

AI doesn’t take your job, it takes the job of the previous AI. For example: Midjourney took Stable Diffusion’s job, and GPT-4 took GPT-3’s job. Once you have a slot in your workflow for AI image generation, AI code generation, or the like, you just allocate that spend to the latest model. Hence, AI takes the job of the previous AI.

AI is better for visuals than verbals. That is, AI is better for the frontend than the backend, and better for images/video than for text. The reason is that user interfaces and images can easily be verified by the human eye, whereas huge walls of AI-generated text or code are expensive for humans to verify. See the discussion with Andrej Karpathy here and here.

Killer AI is already here, and it’s called drones. And every country is pursuing it. So it’s not the image generators and chatbots one needs to worry about.

AI is probabilistic while crypto is deterministic. So crypto can constrain AI. For example, AI can break captchas, but it can’t fake onchain balances. And it can solve some equations, but not cryptographic equations. Thus, crypto is roughly what AI can’t do. See also these talks (1, 2) on how AI makes everything fake, but crypto makes it real again.
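Below is a minimal sketch of the deterministic side. It uses a simple SHA-256 hash commitment as a stand-in for onchain state, which is an assumption for illustration; `commit` and `verify` are hypothetical helper names. The check passes or fails the same way every time, however plausible a guessed input looks. Producing a matching input without knowing the secret is believed to be infeasible for SHA-256, for a model or for anyone else.

```python
import hashlib
import hmac

def commit(secret: bytes) -> str:
    """Publish only the SHA-256 digest of a secret value (hypothetical helper)."""
    return hashlib.sha256(secret).hexdigest()

def verify(commitment: str, claimed_secret: bytes) -> bool:
    """Deterministic check: identical inputs always yield the identical verdict."""
    candidate = hashlib.sha256(claimed_secret).hexdigest()
    # compare_digest avoids leaking timing information during the comparison.
    return hmac.compare_digest(candidate, commitment)

commitment = commit(b"correct horse battery staple")
print(verify(commitment, b"correct horse battery staple"))  # True
print(verify(commitment, b"a plausible-looking guess"))     # False
```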

AI is empirically decentralizing rather than centralizing. Right now, AI is arguably having a decentralizing effect, because (a) there are so many AI companies, (b) a small team can do so much more with the right tooling, and (c) so many high-quality open source models are coming.

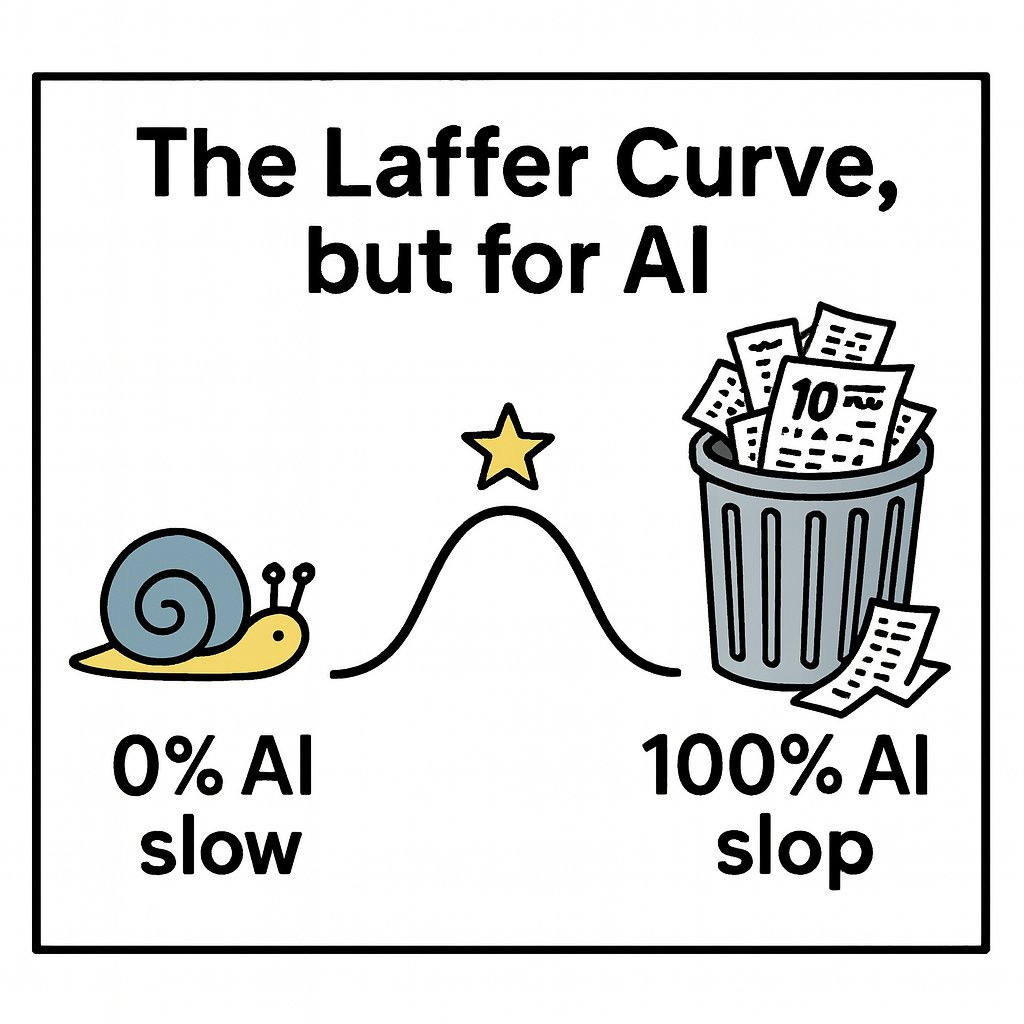

The optimal amount of AI is not 100%. After all: 0% AI is slow, but 100% AI is slop. So the optimal amount of AI is actually between 0-100%. Yes, the exact figure varies by situation, but just the idea that 0% and 100% are both suboptimal is useful. It's the Laffer Curve, but for AI:

Finally, here’s an a16z podcast with Martin Casado and Erik Torenberg where we go through all these ideas in depth!

Today’s AI is Constrained AI

Putting it all together, fundamentally, this is a model of constrained AI rather than omnipotent AI.

AI is economically constrained, because every API call is expensive and because there are so many competing models.

AI is mathematically constrained, because it (provably) can’t solve chaotic, turbulent, or cryptographic equations.
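Here is a small numerical illustration of the "chaotic" part of this claim. It uses the logistic map x → r·x·(1 − x) at r = 4, a standard textbook example of chaos; choosing it is our assumption, not the author's. Two starting points that differ by 10^-10 track each other for a while and then become uncorrelated, because the error roughly doubles each step on average. Any predictor, AI or otherwise, that is fed a slightly imprecise initial state inherits this divergence.

```python
def logistic_orbit(x0: float, r: float = 4.0, steps: int = 60) -> list[float]:
    """Iterate the logistic map x -> r * x * (1 - x), returning every state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs

# Two initial conditions that differ by one part in ten billion.
a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)

# The gap starts tiny, grows roughly geometrically, then saturates at order 1.
for n in range(0, 61, 10):
    print(f"step {n:2d}: |difference| = {abs(a[n] - b[n]):.3e}")
```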

AI is practically constrained, because it has to be prompted and verified, and because it does things middle-to-middle rather than end-to-end.

AI is physically constrained, because it currently requires humans to sense context and type that in via prompts, rather than gathering all that for itself.

To be clear, it’s possible that these limitations are overcome in the future. It’s possible someone could unify the probabilistic System 1 thinking of AIs with the deterministic/logical System 2 thinking that computers have historically been very good at. But that’s an open research problem.

Some of these ideas previously appeared on X and YouTube. See comments here, here, and here. And the talks here, here, and here.
