Yes, You May Need a Blockchain

Public blockchains are massively multiclient databases, where every user is a root user.

Originally appeared in CoinDesk on May 14, 2019

There’s a certain kind of developer who states that blockchains are just terrible databases. As the narrative goes, why not just use PostgreSQL for your application? It’s mature, robust, and high performance. Compared to relational databases, the skeptic claims, blockchains are just slow, clunky, and expensive databases that don’t scale.

While some critiques of this critique are already out there (1, 2), I’d propose a simple one-sentence rebuttal: public blockchains are massively multiclient databases, where every user is a root user. They are useful for storing shared state between users, particularly when that shared state represents valuable data that users want to export without fail, like their money.

The data export/import problem

To motivate public blockchains from the standpoint of a non-crypto engineer, take a look at the cloud diagrams for Amazon Web Services, Microsoft Azure, or Google Cloud.

There are icons for load balancers, transcoders, queues, and lambda functions. There are icons for VPCs and every type of database under the sun, including the new-ish managed blockchain services (which are distinct from public blockchains, though potentially useful in some circumstances).

What there isn’t an icon for is shared state between accounts. That is, these cloud diagrams all implicitly assume that a single entity and its employees (namely, the entity with access to the cloud root account) is the only one laying out the architecture diagram and reading from or writing to the application it underpins. More precisely, these diagrams typically assume the presence of a single economic actor, namely the entity paying the cloud bills.*

But if we visualize the cloud diagrams for not just one but one hundred corporate economic actors at a time, some immediate questions arise. Can these actors interoperate? Can their users pull their data out and bring it into other applications? And given that the users are themselves economic actors, if this data represents something of monetary value, can the users be confident that their data wasn’t modified during all this exporting and importing?

These are the types of questions that arise when we treat data export and import from each entity’s application as a first-class requirement. And, with exceptions that we’ll get into, the answer to these questions today is typically no.

No — different applications typically don’t have interoperable software, or allow their users to easily export/import their data in a standard form, or give users certainty that their data wasn’t intentionally tampered with or inadvertently corrupted during all the exporting and importing.

The reason why boils down to incentives. For most major internet services, there is simply no financial incentive to enable users to export their data, let alone to enable competitors to quickly import said data. While some call this the data portability problem, let’s call it the data export/import problem to focus attention on the specific mechanisms for export and import.

How we do data export/import today: APIs, JSON, PDF, CSV, MBOX, and/or SFTP

Even though the financial incentives aren’t yet present for a general solution to the data export/import problem, mechanisms have been created for many important special cases. These mechanisms include APIs, JSON/PDF/CSV exports, MBOX files, and (in a banking context) SFTP.

Let’s go through these in turn to understand the current state of affairs.

APIs. One of the most popular ways to export/import data is via Application Programming Interfaces, better known as APIs. Some businesses do let you get some of your data out, or give you the ability to write data to your account. But there’s a cost. First, their internal data format is typically proprietary and not an industry standard. Second, sometimes the APIs are not central to their core business and can be turned off. Third, sometimes the APIs are central to their core business and the price can be dramatically raised. In general, if you’re reading or writing to a hosted API, you’re at the mercy of the API provider. We call this platform risk, and being unceremoniously de-platformed has harmed many a startup.

JSON. Another related solution is to allow users or scripts to download JSON files, or read/write them to the aforementioned APIs. This is fine as far as it goes, but JSON is very free form and can describe virtually anything. For example, Facebook’s Graph API and LinkedIn’s REST API deal with similar things but return very different JSON results.
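To make the free-form problem concrete, here is a minimal sketch of what an importer ends up doing when two services describe the same person in differently shaped JSON. The two payloads and the functions `from_service_a` and `from_service_b` are invented for illustration; they are not the actual responses of any real API.

```python
import json

# Two made-up payloads describing the same profile in different shapes.
service_a_json = '{"id": "42", "name": "Ada Lovelace", "friends": {"data": [{"id": "7"}]}}'
service_b_json = '{"memberId": 42, "firstName": "Ada", "lastName": "Lovelace", "connections": [7]}'


def from_service_a(raw: str) -> dict:
    """Map service A's (hypothetical) shape onto a common profile record."""
    doc = json.loads(raw)
    return {
        "id": int(doc["id"]),
        "name": doc["name"],
        "contacts": [int(friend["id"]) for friend in doc["friends"]["data"]],
    }


def from_service_b(raw: str) -> dict:
    """Map service B's (hypothetical) shape onto the same common record."""
    doc = json.loads(raw)
    return {
        "id": doc["memberId"],
        "name": f'{doc["firstName"]} {doc["lastName"]}',
        "contacts": list(doc["connections"]),
    }


profile_a = from_service_a(service_a_json)
profile_b = from_service_b(service_b_json)
```

Both payloads are valid JSON, yet every importer needs one hand-written adapter per source, and each adapter breaks whenever its source changes shape.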

PDF. Another very partial solution is to allow users to export a PDF. This works for documents, as PDF is an open standard that can be read by other applications like Preview, Adobe Acrobat, Google Drive, Dropbox, and so on. But a PDF is meant to be an end product, to be read by a human. It’s not meant to be an input to any application besides a PDF viewer.

CSV. The humble comma separated value file gets closer to what we want for a general solution to the data import/export problem. Unlike the backend of a proprietary API, CSV is a standard format described by RFC 4180. Unlike JSON, which can represent almost anything, a CSV typically represents just a table. And unlike a PDF, a CSV can typically be edited locally by a user via a spreadsheet or used as machine-readable input to a local or cloud application. Because most kinds of data can be represented in a relational database, and because relational databases can usually be exported as a set of possibly gigantic CSVs, it’s also pretty general. However, CSVs are disadvantaged in a few ways. First, unlike a proprietary API, they aren’t hosted. That is, there’s no single canonical place to read or write a CSV representing (say) a record of transactions or a table of map metadata. Second, CSVs aren’t tamper resistant. If a user exports a record of transactions from service A, modifies it, and re-uploads it to service B, the second service would be none the wiser. Third, CSVs don’t have built-in integrity checks to protect against inadvertent error. For example, the columns of a CSV don’t have explicit type information, which means that a column containing the months of the year from 1-12 could have its type auto-converted upon import into a simple integer, causing confusion.
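The last two weaknesses are easy to see in a few lines. The sketch below uses only Python’s standard library; the rows are made up, and the `digest` helper is a hypothetical stand-in for an integrity check that the importer would have to obtain out of band, since the CSV itself carries none.

```python
import csv
import hashlib
import io

# Hypothetical export from "service A": a month number and an amount.
rows = [{"month": 1, "amount": 250.0}, {"month": 12, "amount": 99.5}]

buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["month", "amount"])
writer.writeheader()
writer.writerows(rows)
exported = buffer.getvalue()

# Reading the file back yields plain strings: the column types are gone,
# and each importer has to guess them again.
reimported = list(csv.DictReader(io.StringIO(exported)))
month_type = type(reimported[0]["month"])

# Nothing in the file stops a user from editing a value before re-uploading.
tampered = exported.replace("99.5", "9999.5")


def digest(text: str) -> str:
    """SHA-256 of the file contents, as a hex string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# "Service B" can only notice the edit if it separately holds the original digest.
original_digest = digest(exported)
tamper_detected = digest(tampered) != original_digest
```

The point of the sketch is that the check lives outside the format: without a trusted copy of `original_digest`, the tampered file is indistinguishable from a legitimate export.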

MBOX. While less well known than CSV, the MBOX format for representing collections of email messages is the closest thing out there to a standardized data structure built for import and export between major platforms and independent applications alike. Indeed, there have been papers proposing the use of MBOX in contexts outside of email. While CSV represents tabular data, MBOX represents a type of log-structured data. It’s essentially a single huge plain text file of email messages in chronological order, but can also represent images/file attachments via MIME. Like CSV, MBOX files are an open standard and can be exported, edited locally, and reimported. And like CSV, MBOX has the disadvantages of no canonical host or intrinsic data integrity check.
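For a sense of how simple the format is to work with, here is a small sketch of an export and re-import using the `mailbox` module from Python’s standard library. The addresses, subjects, and the `make_message` helper are placeholders for illustration.

```python
import mailbox
import os
import tempfile
from email.message import EmailMessage


def make_message(subject: str, body: str) -> EmailMessage:
    """Build a small placeholder email."""
    msg = EmailMessage()
    msg["From"] = "alice@example.com"
    msg["To"] = "bob@example.com"
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


path = os.path.join(tempfile.mkdtemp(), "export.mbox")

# Export: append messages, in order, to one plain-text file.
box = mailbox.mbox(path)
box.add(make_message("First", "Hello"))
box.add(make_message("Second", "Hello again"))
box.flush()
box.close()

# Import: any application that understands mbox can read the messages back.
imported = mailbox.mbox(path)
subjects = [message["Subject"] for message in imported]
imported.close()

# The file itself is just text, with each message introduced by a "From " line.
with open(path, "r", encoding="utf-8") as handle:
    raw = handle.read()
```

As with CSV, anyone holding the file can edit `raw` in a text editor before re-importing it, and nothing in the format will flag the change.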

SFTP. Before we move on, there’s one more data export/import mechanism that deserves mention: the secure file transfer protocol, or SFTP. While quite old-fashioned, this is actually the way that individuals send ACH payments back and forth to each other. Essentially, financial institutions use SFTP servers to take in electronic transaction data in specially formatted files and transmit it to the Federal Reserve each day to sync ACH debits and credits with each other (see here, here, here, and here).

Each of these mechanisms is widely used. But they are insufficient for enabling the general case of tamper-resistant import and export of valuable data between arbitrary economic actors — whether they be corporate entities, individual users, or headless scripts. For that, we need public blockchains.

How public blockchains solve the data import/export problem

Public blockchains enable shared state by incentivizing interoperability. They convert many types of data import/export problems into a general class of shared state problems. And they do so in part by incorporating many of the best features of the mechanisms described above.

A canonical API without centralization. Public blockchains provide canonical methods for read/write access like a hosted corporate API, but without the same platform risk. No single economic actor can shut down or deny service to clients of a decentralized public blockchain like Bitcoin or Ethereum.

Lossless import/export. Like JSON/CSV/MBOX, public blockchains also enable individual users to export critical data to their local computer or to a new application (either by sending out funds or exporting private keys), while providing cryptographic guarantees of data integrity.

Incentivized interoperation. Public blockchains provide a means for arbitrary economic actors (whether corporations, individual users, or programs) to seamlessly interoperate. Every economic actor who reads from a public blockchain sees the same result, and any economic actor with sufficient funds can write to a public blockchain in the same way. No account setup is necessary and no actor can be blocked from read/write access.

Cryptographic data integrity. And perhaps most importantly, public blockchains give financial incentives for interoperability and data integrity.

This last point deserves elaboration. A public blockchain like Bitcoin or Ethereum typically records the transfer of things of monetary value. This thing could be the intrinsic cryptocurrency of the chain, a token issued on top of the chain, or another kind of digital asset.

Because the data associated with a public blockchain represents something of monetary value, it finally delivers the financial incentive for interoperability. After all, any web or mobile app that wants to receive (say) BTC must honor the Bitcoin blockchain’s conventions. Indeed, the application developers would have no choice, because Bitcoin by design has a single, canonical longest proof-of-work chain with cryptographic validation of every block in that chain.
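To see why cryptographic validation of every block forces everyone onto the same history, consider a deliberately simplified sketch of a hash-linked chain. This is not Bitcoin’s actual block format: it omits proof-of-work, signatures, and Merkle trees, and the names `block_hash`, `append_block`, and `is_valid` are hypothetical.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain: list, transactions: list) -> None:
    """Add a block that commits to the hash of the block before it."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"prev_hash": prev_hash, "transactions": transactions})


def is_valid(chain: list) -> bool:
    """Check that every block still points at the true hash of its predecessor."""
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


chain = []
append_block(chain, ["alice pays bob 5"])
append_block(chain, ["bob pays carol 2"])
append_block(chain, ["carol pays dave 1"])
valid_before = is_valid(chain)

# Quietly rewriting history in an early block breaks every later link.
chain[0]["transactions"][0] = "alice pays bob 500"
valid_after = is_valid(chain)
```

In this toy version an edit to the most recent block would go unnoticed, since nothing yet points at it. In a real chain, proof-of-work and the other nodes’ copies of the chain play that anchoring role.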

So, that’s the financial incentive for import.

As for the incentive for export, when it comes to money in particular, users demand the ability to export with complete fidelity, and very quickly. It’s not their old cat pics, which they might be ok with losing track of due to inconvenience or technical issues. It’s their money, their Bitcoin, their cryptocurrency. Any application that holds it must make it available for export when they want to withdraw it, whether that means supporting send functionality, offering private key backups, or both. If not, the application is unlikely to ever receive deposits in the first place.

So, that’s the financial incentive for export.

Thus, a public blockchain economically incentivizes every economic actor that interacts with it to use the same import/export format as every other actor, whether they be corporation, user, or program. Put another way, public blockchains are open state databases. That's the next step after open source, as they provide open access to all data. Anyone can read all the data from a public blockchain to create a block explorer, and anyone can write to a public blockchain with their own wallet. This is very different from how, say, Facebook or Twitter's database works!

Public blockchains are massively multiclient, open state databases

This is a real breakthrough. We’ve now got a reliable way to incentivize the use of shared state: to simultaneously allow millions of individuals and companies to read from (and thousands to write to) the same data store while enforcing a common standard and maintaining high confidence in the integrity of the data.

This is very different from the status quo. You typically don’t share the root password to your database on the internet, because a database that allows anyone to read/write to it usually gets corrupted. Public blockchains solve this problem with cryptography rather than permissions, greatly increasing the number of simultaneous users.

In other words, public blockchains are massively multiclient open state databases where every user is a root user.

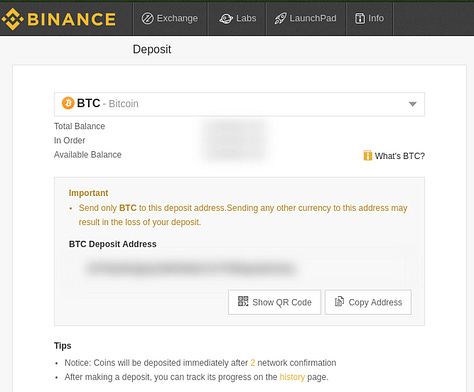

One way to understand this viscerally is to look at btc.com, blockchair.com, and blockcypher.com and realize that all of them are simultaneously reading from the same Bitcoin blockchain. Then look at coinbase.com, binance.com, and kraken.com and realize that all of them are likewise simultaneously reading and writing transactions to the same Bitcoin blockchain. Finally, look at miners like AntPool, F2Pool, and ViaBTC and realize that all of them are reading and writing blocks to the same Bitcoin blockchain.

Any user with an internet connection can read. Anyone with some BTC can write a transaction. And anyone with sufficient compute power can mine a Bitcoin block. That's what a public blockchain means: every user is a root user.

1. Any user can read all transactions

2. Any user with BTC can write transactions

3. Any user with compute can write blocks
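These three rules can be sketched as a toy model in which access depends on funds and compute rather than on an account granted by an owner. Everything here is an illustrative assumption: the `ToyChain` class, its method names, and the two-character difficulty are made up, and a real chain would also require a valid signature on every transaction.

```python
import hashlib
from dataclasses import dataclass, field

DIFFICULTY_PREFIX = "00"  # toy difficulty; real networks are vastly harder


@dataclass
class ToyChain:
    balances: dict
    mempool: list = field(default_factory=list)
    blocks: list = field(default_factory=list)

    def read(self) -> list:
        """1. Anyone can read all confirmed transactions; no credentials needed."""
        return [tx for block in self.blocks for tx in block["transactions"]]

    def write_transaction(self, sender: str, recipient: str, amount: int) -> bool:
        """2. Anyone with sufficient funds can write a transaction."""
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            return False
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount
        self.mempool.append((sender, recipient, amount))
        return True

    def write_block(self, max_attempts: int) -> bool:
        """3. Anyone with enough compute (modeled as hash attempts) can write a block."""
        prev = self.blocks[-1]["hash"] if self.blocks else "0" * 64
        for nonce in range(max_attempts):
            candidate = f"{prev}|{self.mempool}|{nonce}".encode("utf-8")
            digest = hashlib.sha256(candidate).hexdigest()
            if digest.startswith(DIFFICULTY_PREFIX):
                block = {"hash": digest, "nonce": nonce, "transactions": list(self.mempool)}
                self.blocks.append(block)
                self.mempool.clear()
                return True
        return False


toy = ToyChain(balances={"alice": 10})
accepted = toy.write_transaction("alice", "bob", 4)    # alice has funds
rejected = toy.write_transaction("mallory", "bob", 1)  # mallory has none
mined = toy.write_block(max_attempts=100_000)
confirmed = toy.read()
```

Notice what is absent: there is no user table, no password check, and no administrator. The only gates are a balance and a hash puzzle.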

It’s true that today public blockchains are typically focused on monetary and financial applications, where the underlying dataset represents an append-only transaction history with immutable records. That does limit their generality, in terms of addressing all the different versions of the data import/export problem. But there’s ongoing development on public blockchain versions of things like OpenStreetMap, Wikipedia, and Twitter as well as systems like Filecoin/IPFS. These wouldn’t just represent records of financial transactions where immutability was a requirement, but could represent other types of data (like map or encyclopedia entries) that would be routinely updated.

Done right, these newer types of public blockchain-based systems may allow any economic actor with sufficient funds and/or cryptographic credentials to not just read and write but also edit their own records while preserving data integrity. Given this capability, there’s no reason one can’t put a SQL layer on top of a public blockchain to work with the shared state it affords, just like an old-fashioned relational database. This results in a new type of database with no privileged owner, where all seven billion humans on the planet (and their scripts!) are authorized users, and which can be written to by any entity with sufficient funds.
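As a rough sketch of what a SQL layer could look like on the read side, the snippet below loads a few transaction records into an in-memory SQLite table and queries them. The records are hand-written stand-ins for data that would be read from a public chain, and the table and column names are assumptions; writes would still have to go through the chain itself rather than through SQL.

```python
import sqlite3

# Hypothetical records standing in for transactions read from a public chain:
# (block_height, sender, recipient, amount)
chain_transactions = [
    (1, "alice", "bob", 5.0),
    (1, "bob", "carol", 2.0),
    (2, "alice", "carol", 1.5),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions ("
    "block_height INTEGER, sender TEXT, recipient TEXT, amount REAL)"
)
conn.executemany("INSERT INTO transactions VALUES (?, ?, ?, ?)", chain_transactions)

# An ordinary relational query over the shared state.
received = conn.execute(
    "SELECT recipient, SUM(amount) FROM transactions "
    "GROUP BY recipient ORDER BY recipient"
).fetchall()
conn.close()
```

Because anyone can read the underlying chain, anyone can build this same index independently and arrive at the same answers, which is essentially what a block explorer does.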

This would have powerful second order consequences. Crawling public blockchains ("chain crawling") is easier than web crawling, because websites have robots.txt files that prevent the hoi polloi from indexing them. But if more data is stored on public blockchains, then more novel services can be built on top of these open state databases. Indeed, this provides a long-term vision for opening up the backends of social networks and search engines in a privacy-protecting and data-integrity-preserving way.

That day isn’t here yet. It remains to be seen how far we can push the use cases for public chains. And scaling challenges abound. But hopefully, it’s clear that while public blockchains are indeed a new kind of database, they offer something quite different from what a traditional database offers. They're a database where every user is a root user.

* One exception is the so-called “Requester Pays” feature that Amazon and other cloud services offer. This is a cool feature that lets someone pay to write to your S3 bucket. But it’s permissioned – it still requires every would-be writer to open an AWS account, and the bucket owner has to be willing to let them all write to their bucket, so there’s still a single distinguished owner.