Prompts are Tiny Programs

And prompt engineering is a subset of software engineering.

I may turn more popular Twitter threads into Substack articles, to make them easier to find, even if I don’t always mail them out. Towards that end, here’s a bit of AI posting to interrupt your regular crypto/politics posting.

We’re now about 18 months into the AI revolution. One thing that was uncertain in late 2022 was whether prompt engineering was here to stay, or whether better AI would quickly obviate it.

I now think it’s here to stay, and I have an explanation that makes sense, at least to me: prompt engineering is just a subset of software engineering.

That is, prompts are tiny programs written in natural language. But the API isn’t specified and varies between models. So guessing the right “function calls” with clever use of vocabulary is a huge part of the game. On the other hand, even if you don’t guess *exactly* the right words to use, the model will often do what you mean.

This is different from a conventional API, which is both more legible and more fragile. The exact words that make it do what you want are written down, but if you don’t use those exact words, it won’t do what you want.
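For contrast, here is that legible-but-fragile behavior in an ordinary Python API. The built-in `sorted` documents a keyword argument named `reverse`; the near-miss `reversed=True` is not read charitably but rejected with a `TypeError`, whereas a model would usually treat “sort in reverse” and “sort backwards” as the same request. The wrapper `call_sorted` is just an illustrative helper, not part of any library.

```python
# Conventional APIs are legible (documented) but fragile (exact names required).
def call_sorted(values, **kwargs):
    """Call the built-in sorted() and report whether the keyword names were accepted."""
    try:
        return sorted(values, **kwargs)
    except TypeError as err:
        # sorted() rejects any keyword it does not know, however close the spelling.
        return f"rejected: {err}"


print(call_sorted([3, 1, 2], reverse=True))   # the documented keyword
print(call_sorted([3, 1, 2], reversed=True))  # a near-miss the interpreter won't "get"
```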

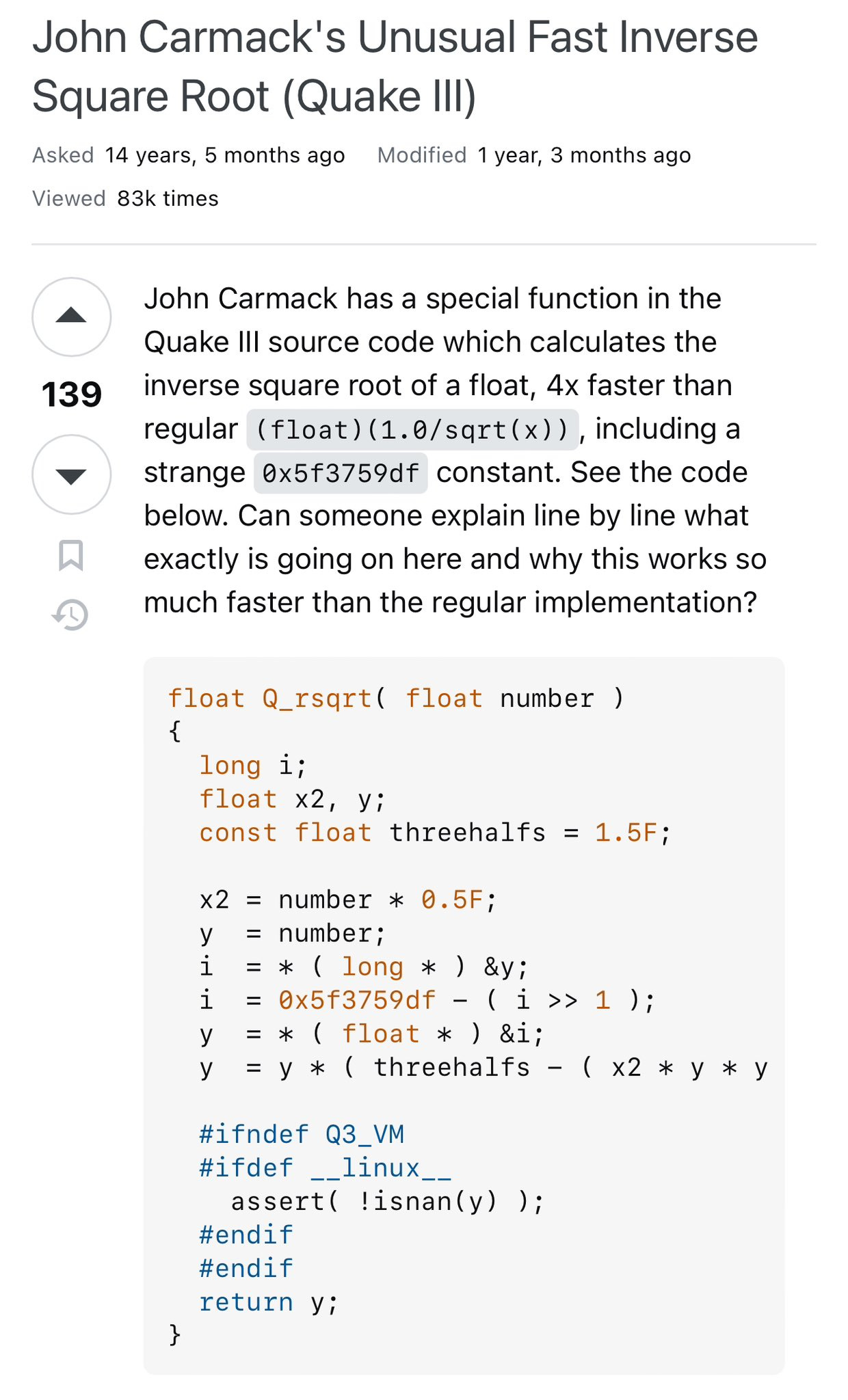

Even given this difference, the concept of prompts as tiny programs using hidden APIs helps explain the bizarre magic associated with specific phrases. I’m reminded of the fast inverse square root from Quake III Arena, a famously obscure incantation in C that just so happened to deliver a 4x speedup.
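For readers who haven’t seen it, here is that incantation ported to Python as a sketch. The constant `0x5F3759DF` and the single Newton-Raphson step follow the well-known C original; the port is only meant to show how opaque the bit trick is. None of the original speedup carries over: in Python, `x ** -0.5` is both simpler and faster.

```python
import struct


def fast_inv_sqrt(x: float) -> float:
    """Approximate 1/sqrt(x) for x > 0 using the Quake III bit trick (Python port)."""
    # Reinterpret the 32-bit float's bits as an unsigned integer.
    i = struct.unpack("<I", struct.pack("<f", x))[0]
    # Treating the bits as a scaled log2(x), halving and negating approximates
    # log2(1/sqrt(x)); the magic constant corrects the exponent bias.
    i = 0x5F3759DF - (i >> 1)
    # Reinterpret the integer bits back as a 32-bit float: a rough first guess.
    y = struct.unpack("<f", struct.pack("<I", i))[0]
    # One Newton-Raphson step refines the guess.
    return y * (1.5 - 0.5 * x * y * y)


for x in (0.25, 1.0, 4.0, 10.0):
    print(f"x={x}: approx={fast_inv_sqrt(x):.6f} exact={x ** -0.5:.6f}")
```

The magic constant is exactly the kind of “phrase” you couldn’t guess from first principles: it works because of how 32-bit floats happen to be laid out.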

More code now looks like that, and it makes sense. C is how you talk to machines and English is how you talk to humans. So, just like you write part of a large application in C for performance, you’ll also write part of it in English for dealing with unstructured data.
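A minimal sketch of that split, assuming a hypothetical `model` callable that stands in for whatever LLM client you use (`fake_model` is a stub so the example runs offline): the JSON parsing and arithmetic stay in ordinary Python, while the free-text reviews are handed to a short English “function.”

```python
import json
from typing import Callable, Dict, List

# Hypothetical model client: any function that maps a prompt string to a reply string.
ModelFn = Callable[[str], str]


def classify_sentiment(review: str, model: ModelFn) -> str:
    """The 'English' part: a tiny program written as a prompt."""
    prompt = (
        "Classify the sentiment of the customer review below. "
        "Answer with exactly one word: positive, negative, or neutral.\n\n"
        f"Review: {review}"
    )
    return model(prompt).strip().lower()


def summarize_orders(raw_json: str, model: ModelFn) -> Dict[str, object]:
    """The conventional part: structured parsing and arithmetic stay in ordinary code."""
    orders: List[dict] = json.loads(raw_json)
    total = sum(o["quantity"] * o["unit_price"] for o in orders)
    sentiments = [classify_sentiment(o["review"], model) for o in orders]
    return {"total": total, "sentiments": sentiments}


def fake_model(prompt: str) -> str:
    # Offline stand-in for an LLM call: crude keyword matching, for demonstration only.
    return "Positive" if "love" in prompt.lower() else "negative"


RAW_ORDERS = (
    '[{"quantity": 2, "unit_price": 5.0, "review": "I love it"},'
    ' {"quantity": 1, "unit_price": 3.5, "review": "Broke after a day"}]'
)
print(summarize_orders(RAW_ORDERS, fake_model))
```

Swapping `fake_model` for a real client changes nothing else in the program, which is the sense in which the prompt is just one more function.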

You can go further with this analogy. Once you think of prompts as code, you could probably build model-aware syntax highlighters for favored keywords. You might even be able to automatically generate API-like docs from a model for the most common use cases.
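As a rough illustration of the highlighter idea, this sketch marks phrases from a per-model keyword list, the way an editor highlights reserved words. The model name `example-model-v1` and its keyword list are hypothetical; a real list would have to be discovered empirically for each model and version.

```python
import re

# Hypothetical: phrases assumed to steer one particular model. A real list
# would have to be found by experiment, per model and per version.
FAVORED_KEYWORDS = {
    "example-model-v1": ["step by step", "concise", "JSON"],
}


def highlight(prompt: str, model: str, open_mark: str = "[[", close_mark: str = "]]") -> str:
    """Wrap each favored keyword for `model` in markers, like a syntax highlighter."""
    keywords = FAVORED_KEYWORDS.get(model, [])
    if not keywords:
        return prompt
    # Longest phrases first so multi-word keywords win over shorter overlapping ones.
    alternation = "|".join(re.escape(k) for k in sorted(keywords, key=len, reverse=True))
    pattern = re.compile(rf"\b(?:{alternation})\b", re.IGNORECASE)
    return pattern.sub(lambda m: f"{open_mark}{m.group(0)}{close_mark}", prompt)


print(highlight("Think step by step and reply in json.", "example-model-v1"))
```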

And you can think of every new model you add to your codebase as roughly analogous to adding a new programming language — because just as it takes time for someone to ramp up on the idioms of Rust, they’ll need to play around with the latest Mistral to get the hang of how to talk to it.

Anyway, this is all probably obvious to folks spending 100% of their time in the field, and it’s similar to some of the things Karpathy has posted about. But at least for me it was a useful articulation of why prompts are here to stay: prompts are tiny programs.

Postscript

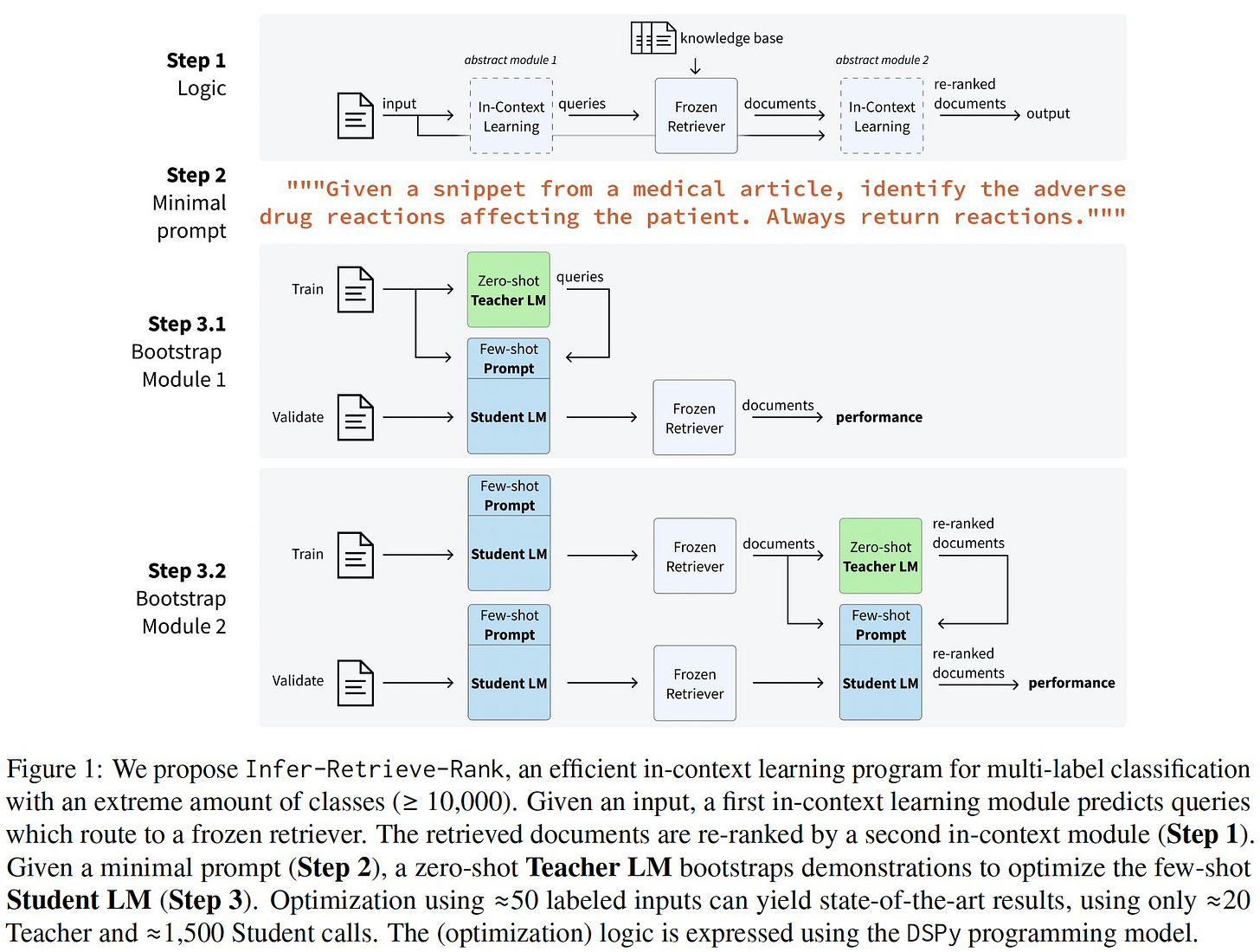

The (now routine!) presence of a prompt in the middle of a pipeline that achieves state-of-the-art results (as in this study) is what “prompted” this post.

The first reaction to prompts is that relying on them in your codebase as much as you rely on source code will be kind of hacky and fragile… but it’s fine *if* the output of that part of the code can tolerate statistical noise.
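One way to make a prompt-backed step tolerate that noise is to accept only outputs from a known set and retry otherwise, falling back to a safe default. This is a generic validate-and-retry pattern chosen for illustration; `model` is a hypothetical callable, and `flaky_model` simulates a model that rambles once before answering cleanly.

```python
from typing import Callable, Iterable

# Hypothetical model client: any function that maps a prompt string to a reply string.
ModelFn = Callable[[str], str]


def classify_with_retries(prompt: str, model: ModelFn, allowed: Iterable[str],
                          max_attempts: int = 3, fallback: str = "unknown") -> str:
    """Call the model and only accept answers from an allowed set.

    Invalid outputs are treated as noise and retried; after max_attempts the
    caller gets a safe fallback instead of an exception.
    """
    allowed_set = {a.lower() for a in allowed}
    for _ in range(max_attempts):
        answer = model(prompt).strip().lower()
        if answer in allowed_set:
            return answer
    return fallback


# Simulated model: the first reply is chatty (invalid), the second is clean.
replies = iter(["Sure! The answer is positive.", "POSITIVE"])


def flaky_model(prompt: str) -> str:
    return next(replies)


print(classify_with_retries("Classify: I love it", flaky_model, ["positive", "negative"]))
```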

To reduce the noise a bit, we probably want something like pip’s version freezing for prompts, where the exact model and version number are always included alongside the prompt in some form.
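A minimal sketch of what pinning might look like, assuming hypothetical model names and version strings; this is not an existing tool. The prompt template, model identifier, exact version, and sampling temperature are frozen together, and a short hash of all four acts like a lockfile entry you can log next to every output.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PinnedPrompt:
    """A prompt frozen together with the exact model it was tuned against.

    Analogous to a pinned line in requirements.txt (e.g. "requests==2.31.0"):
    changing the model version counts as changing the program.
    """
    model: str          # hypothetical model identifier
    model_version: str  # exact snapshot/version string
    template: str
    temperature: float = 0.0

    def render(self, **kwargs: str) -> str:
        return self.template.format(**kwargs)

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys) so equal pins always hash the same.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


summarize_v1 = PinnedPrompt("example-model", "2024-05-01", "Summarize in one sentence:\n{text}")
summarize_v2 = PinnedPrompt("example-model", "2024-08-01", summarize_v1.template)
# Same template, new model version: a different "build" as far as the pin is concerned.
print(summarize_v1.fingerprint(), summarize_v2.fingerprint())
```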

The DSPy library from this tweet is one way of using prompts in programs more robustly, by abstracting away the low-level strings and turning them into explicit function calls. Pretty interesting.
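To make the idea concrete without guessing at DSPy’s real API, here is a toy Python sketch of the same move: a spec string such as `"question -> answer"` becomes an ordinary function with named inputs and parsed outputs. `make_function` and `fake_model` are hypothetical names, and the sketch makes no claim about how DSPy itself works.

```python
from typing import Callable, Dict

# Hypothetical model client: any function that maps a prompt string to a reply string.
ModelFn = Callable[[str], str]


def make_function(spec: str, instructions: str, model: ModelFn) -> Callable[..., Dict[str, str]]:
    """Turn a spec like "question -> answer" into an ordinary Python function (toy only)."""
    inputs_part, outputs_part = (side.strip() for side in spec.split("->"))
    input_names = [n.strip() for n in inputs_part.split(",")]
    output_names = [n.strip() for n in outputs_part.split(",")]

    def fn(**kwargs: str) -> Dict[str, str]:
        missing = set(input_names) - set(kwargs)
        if missing:
            raise TypeError(f"missing inputs: {sorted(missing)}")
        # Build the low-level prompt string so callers never have to.
        lines = [instructions, ""]
        lines += [f"{name}: {kwargs[name]}" for name in input_names]
        lines.append("Reply with one line per field: " + ", ".join(f"{n}: ..." for n in output_names))
        reply = model("\n".join(lines))
        # Parse "field: value" lines back into a dict; unrecognized lines are ignored.
        result: Dict[str, str] = {}
        for line in reply.splitlines():
            key, sep, value = line.partition(":")
            if sep and key.strip() in output_names:
                result[key.strip()] = value.strip()
        return result

    return fn


def fake_model(prompt: str) -> str:
    # Offline stand-in for an LLM call.
    return "answer: Paris"


capital = make_function("question -> answer", "Answer the question briefly.", fake_model)
print(capital(question="What is the capital of France?"))
```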

Also, just to pre-empt people who’d say “what, you’re an AI expert now too?!?”…I wouldn’t call myself an AI expert at all, any more than I’d call myself a crypto expert. Maybe only a few folks like Greg Brockman or Yann LeCun really deserve the title of “AI expert.” But I was teaching neural networks at Stanford in 2007, back when that meant something. And I spent years building commercially valuable systems in machine learning, robotics, and genomics before joining a16z and Coinbase. So I have a reasonable facility with the space, and I post in order to learn and think things through.